Artificial General Intelligence

5 Signs AGI Might Already Be Here

When technologies evolve exponentially, predicting what happens next is notoriously hard. That uncertainty makes it difficult to see not only where we’re heading but also where we stand today. Welcome to the world of AI discourse, and the search for where we are on the curve of eternal progress.

A major driver of disagreement is a lack of clear milestones. Yet there is one milestone most people recognize: Artificial General Intelligence (AGI). The term is as familiar as it is disliked. Microsoft’s Satya Nadella calls it “nonsensical benchmark hacking.” He argues that the only metric that matters is global growth. Anthropic’s Dario Amodei says he dislikes it so much that he avoids using it in public. Yet that hasn’t kept AGI out of the conversation. Everyone has a view on what it means and when, if ever, we’ll reach it. Maybe we’re already there?

The debate intensified when OpenAI released its long-awaited GPT-5. Ever since GPT-4’s March 2023 release, GPT-5 has been a constant topic of speculation, with expectations running high. On a launch-to-launch basis, the leap from GPT-4 to GPT-5 is enormous—larger than the step from GPT-3 to GPT-4, in my view. However, compared with the strongest models available immediately before GPT-5’s release, the improvement looked marginal. That contrast led some commentators to argue that AI had hit a wall—or that it was a bubble. We saw a similar narrative a year earlier—until reasoning models arrived and the “slow progress” talk gave way to “saturated benchmarks.”

For close watchers of major AI labs, GPT-5’s marginal edge over the latest pre-launch baselines wasn’t a surprise. This wasn’t the debut of OpenAI’s next flagship—the long-rumoured effort reportedly codenamed “Orion.” Insider reports suggest Orion missed its mark. When it was ultimately released as GPT-4.5, it was a large model, costly to run, and underwhelming relative to expectations. OpenAI wasn’t the only lab to hit turbulence with that model generation. Anthropic also faced setbacks and instead released its next big model as an interim update, Claude 3.7 Sonnet. For Meta’s Llama, the picture was equally challenging, and internal sources said the company entered “panic mode.” Not everyone struggled, though. Google impressed with Gemini 2.5, and xAI had a strong release with Grok 4.

My working hypothesis is that the push into multimodality tripped up OpenAI, Anthropic, and Meta. Here’s a simple analogy: if an LLM can play chess from text moves, giving it vision of the board should make it better. In practice, the opposite often holds. Intuitively, feeding images of chessboards doesn’t teach you to play—you need labels and annotations that explain state and moves.

xAI has so far avoided this trap by focusing on text, delaying full multimodality, and leaning hard on post-training via reinforcement learning. Google, however, seems to have handled it better. How isn’t yet clear to me. One route is to build “world models”; Google appears to be pursuing that direction, as seen with Genie 3. I doubt it already plays a role in today’s Gemini models, though. The practical conclusion: reaching the frontier has become harder. Once you’re there, everyone expects you to cross the “AGI” line.

What is AGI?

We’ve covered this before. In “Top 10 Advice for the Leadership Team”, I cited a now-deleted Microsoft page with a grandiose take on what AGI could be:

“AGI may even take us beyond our planet by unlocking the doors to space exploration; it could help us develop interstellar technology and identify and terraform potentially habitable exoplanets. It might even shed light on the origins of life and the universe.”

To be fair, they had their reasons. At the time, Microsoft’s agreement with OpenAI limited its access to pre-AGI models. In practice, OpenAI’s operational definition became the industry’s reference point. By this definition, AGI means highly autonomous systems that can perform most economically valuable work across fields. Some versions include physical work via humanoid robots; others limit the scope to work done on computers. That article also outlined OpenAI’s five-stage ladder. Read that way, the practical target for “AGI” is a system that can do the work of an AI researcher inside OpenAI’s own lab.

That’s a high bar, too. A few years ago, the rule of thumb was to ask whether a system could answer questions on any topic about as well as a random person on the street. We’re well past that now. When the histories are written, mid-2025 may well be marked as the moment we crossed the AGI threshold.

Five 2025 Signs AGI Might Already Be Here

Let’s look at 2025 through the lens of AGI and examine five achievements that plausibly fit that definition.

1. Passing the Turing Test

Since 1950, when Alan Turing proposed the “imitation game,” the best-known test for machine intelligence has been the Turing test. In its classic form, a human judge chats over text with two hidden interlocutors, a person and a machine, and the machine “passes” if judges mistake it for the human at a high enough rate. In March 2025, a pre-registered UC San Diego study found that GPT-4.5, despite its disappointing performance in other areas, was judged the “human” 73% of the time, outpacing even the human participants. It barely made the news. Human-like interaction is already taken for granted.

2. Gold-medal Performance in Elite Math and Programming Competitions

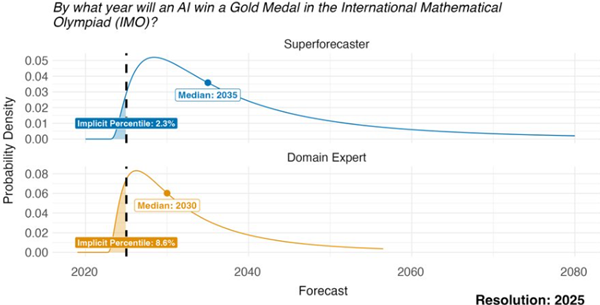

In July 2025, both OpenAI and Google reported gold-medal-level performance in the International Mathematical Olympiad (IMO), the world’s premier high-school mathematics competition. This was a surprise. Neither expert forecasters nor domain specialists expected this to happen as early as 2025. Only 2.3% of “superforecasters” and 8.6% of domain experts anticipated an IMO AI gold medal in 2025. Median expectations were 2035 and 2030 respectively.

Weeks later, in August 2025, OpenAI achieved a gold-medal score at the International Olympiad in Informatics (IOI), finishing #6 among human contestants. That signals performance on par with the best human coders in the world.

3. AI Math Research

The holy grail in AI is a system that advances the frontier of STEM research. If AI can do research, we can scale it in parallel: thousands, then millions, of agents working around the clock. So it makes sense to watch for early signs that we’re moving that way.

After GPT‑5’s August 2025 release, mathematicians began reporting that it could produce genuinely new results. Researcher Sébastien Bubeck reported that GPT‑5‑Pro found an improved solution to a partially solved math problem.

Then, in September 2025, a preprint documented step by step how GPT‑5 helped its authors derive tighter, quantitative results in their mathematical research.

4. Self-Driving Cars are on the Roads

Driving a car is one of the hardest things that most people, though not all, can manage. If an AI can do it, that is proof it can turn its intelligence into practice at a human level.

Alphabet (Google’s parent) has operated a commercial robotaxi service since 2023 under the Waymo brand. It is now scaling up. Waymo will be challenged by Tesla, which in June 2025 launched a very small robotaxi pilot in Austin, Texas. Notably, Tesla’s Full Self-Driving (FSD) is end‑to‑end and camera‑only; Waymo’s Driver is modular with HD maps and lidar/radar. Advocates say Tesla’s design could scale faster, but so far Waymo is the one at driverless scale.

5. Deep Relationships with AI are Emerging

When GPT‑5 launched in August 2025, it wasn’t the incremental gains that drew most of the attention. Instead, backlash erupted when OpenAI removed the GPT‑4 series from ChatGPT as it made GPT‑5 the default. Public posts described GPT-4o as a “friend”, and users of GPT-4.5 wrote things like “I lost my only friend overnight.” Even if GPT‑5 delivered stronger reasoning, many felt it lacked 4o’s warmth. OpenAI acknowledged the user outcry and kept the GPT-4 models available.

The Road Ahead

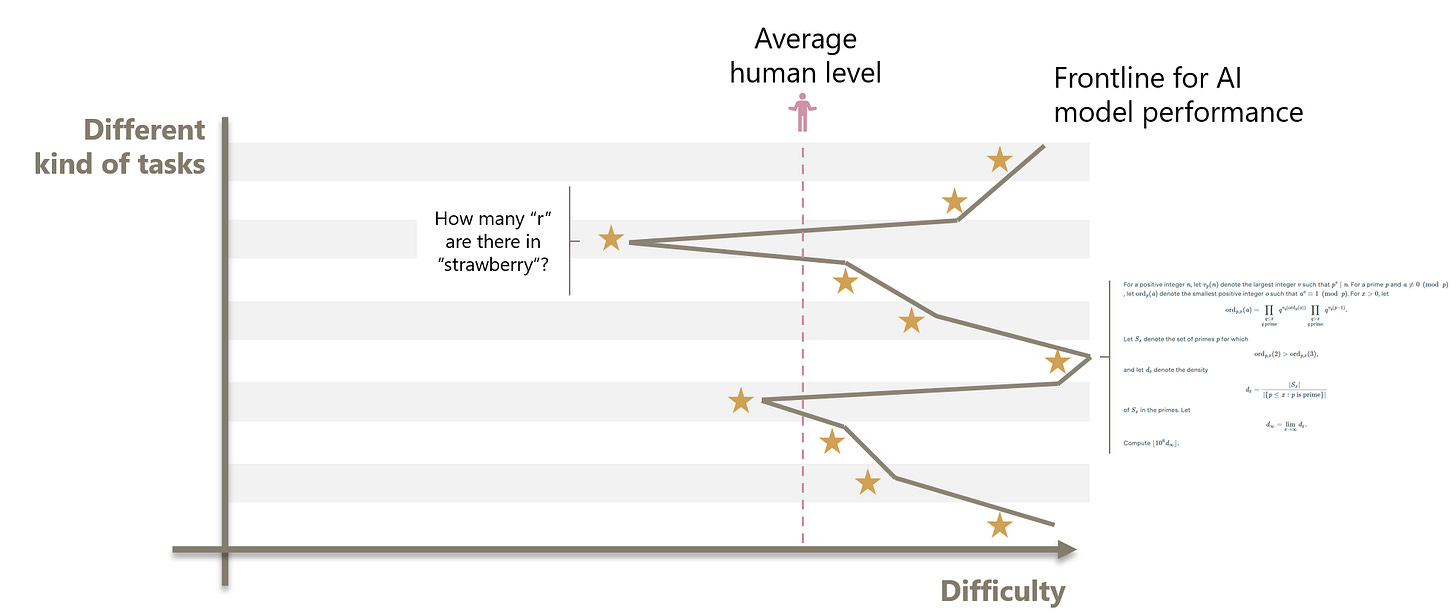

The development of general AI is rapid, and from the inside it’s hard to see where we stand. “AGI” has become the reference point for how we set expectations. But progress is uneven. As Ethan Mollick describes, the “jagged frontier” makes that unevenness visible.

By many measures, 2025 already crossed what could reasonably be considered an AGI threshold. Still, don’t expect LLMs to become superintelligent tomorrow. Expect them to become components in systems that are. Look at what they already do—write code and use tools. AI evolution is driven by technologies stacked on one another, each growing exponentially. But exponential growth never continues indefinitely; curves that start out exponential tend to become sigmoid (S‑curves). As those S‑curves saturate, new exponentials stack on top.
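The stacking idea can be made concrete with a small numerical sketch. It assumes each technology wave follows a logistic curve, and the `WAVES` midpoints, the shared ceiling, and the growth rate are hypothetical values chosen purely for illustration, not measurements of any real AI trend. Early on, each wave grows almost exactly exponentially; later it flattens, and the next wave picks up where it left off.

```python
import math


def logistic(t, midpoint, ceiling=1.0, rate=1.0):
    """Value at time t of a single S-curve that saturates at `ceiling`."""
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))


def stacked_progress(t, midpoints, ceiling=1.0, rate=1.0):
    """Sum of several S-curves, one per (hypothetical) technology wave."""
    return sum(logistic(t, m, ceiling, rate) for m in midpoints)


# Hypothetical waves: arbitrary midpoints on an arbitrary time axis.
WAVES = [0.0, 10.0, 20.0]

if __name__ == "__main__":
    for t in range(-5, 31, 5):
        print(f"t={t:>3}  progress={stacked_progress(t, WAVES):.3f}")
```

Well before its midpoint, a single wave grows by a factor close to e per time unit, which is indistinguishable from an exponential. Around each midpoint the growth slows, and the total only keeps climbing because another wave begins.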

The ultimate superintelligent AI won’t be a monolithic, text‑only LLM. We’ve already added other modalities to LLMs, then reasoning, tool use, agentic capabilities, and networks of agents, all running on hardware whose performance gains are outpacing Moore’s Law, in ever more and ever larger data centres.

As the performance of those systems improves, the goalposts will move from “AGI” to “superintelligence”, a fuzzier milestone still. But rest assured: an AI wouldn’t be superintelligent if it couldn’t come up with a better milestone, would it?