Artificial Intelligence in 2024

Predictions, Speculation, and Reflections

2023 was a wild ride in the world of AI, surpassing even the boldest of predictions. As we ride the wave of exponential technological growth, 2024 promises to outdo its predecessor in sheer unpredictability and excitement. Predicting the twists and turns of AI in the coming year is akin to forecasting the weather in a land of perpetual storms, but let's be brave and peek into what 2024 might hold for AI.

Generative Artificial Intelligence in 2023

The past year has marked a pivotal turning point in the field of generative AI, setting the stage for groundbreaking advancements. Celebrating its first anniversary on November 30, OpenAI's ChatGPT, initially powered by GPT-3.5, paved the way for the subsequent introduction of the more sophisticated GPT-4 on March 14, 2023. These launches have been crucial in familiarizing the public with transformer-based Large Language Models (LLMs).

The inner workings of LLMs are subject to debate among AI experts. A perspective I find particularly insightful is viewing LLMs as sophisticated compressors, distilling vast swaths of information into usable knowledge. These models then use this compressed information to construct representations of the world, enabling them to predict subsequent words in a text sequence. While this capability might appear limited in isolation, it reaches its full potential when augmented by Reinforcement Learning from Human Feedback (RLHF), turning a simple text generator into a sophisticated assistant. This advancement is what distinguishes the GPT-3.5-powered ChatGPT from the GPT-3 model released in 2020.
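
To make the idea of "compress, then predict the next word" concrete, here is a deliberately tiny sketch. It is not how a transformer works: it simply counts which word follows which in a small made-up corpus (the corpus and the function names `train_bigram` and `predict_next` are illustrative assumptions) and uses that compressed table of counts to guess the next word.

```python
from collections import Counter, defaultdict


def train_bigram(text: str) -> dict[str, Counter]:
    """Compress the text into a table: for each word, how often each word follows it."""
    words = text.lower().split()
    followers: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def predict_next(followers: dict[str, Counter], word: str):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Made-up training text, purely for illustration.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram(corpus)

print(predict_next(model, "the"))  # "cat" follows "the" most often in this corpus
print(predict_next(model, "sat"))  # "on" is the only word that follows "sat"
```

A real LLM replaces the count table with billions of learned parameters and conditions on far more than one preceding word, but the training objective, predicting what comes next, is the same in spirit.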

GPT-4 has exhibited extraordinary capabilities, rivalling graduate students on standardized tests, and scoring a 155 on a verbal IQ test. However, its performance varies across different tasks, posing challenges in utilization and underscoring the need for specialized skills and training for optimal use. Despite these variations, the latest iteration, GPT-4 Turbo, stands as the preeminent foundational model in the current market, outshining GPT-3.5 and surpassing other prominent models such as Anthropic’s Claude 2 and Meta’s open-source model Llama 2.

As we look to the future, the expected launch of Google’s Gemini Ultra in early 2024 looms as the next significant event. Announced on December 6, Gemini Ultra is anticipated to bring enhancements over GPT-4 Turbo.

Forecasting the future course of Generative AI remains a challenging endeavour. In this article, we nevertheless seek to explore its potential development path by looking at the big picture, reasoning from first principles, and drawing on historical progressions to shed light on where this transformative technology might head next.

A Framework for Understanding the Evolution of Artificial Intelligence

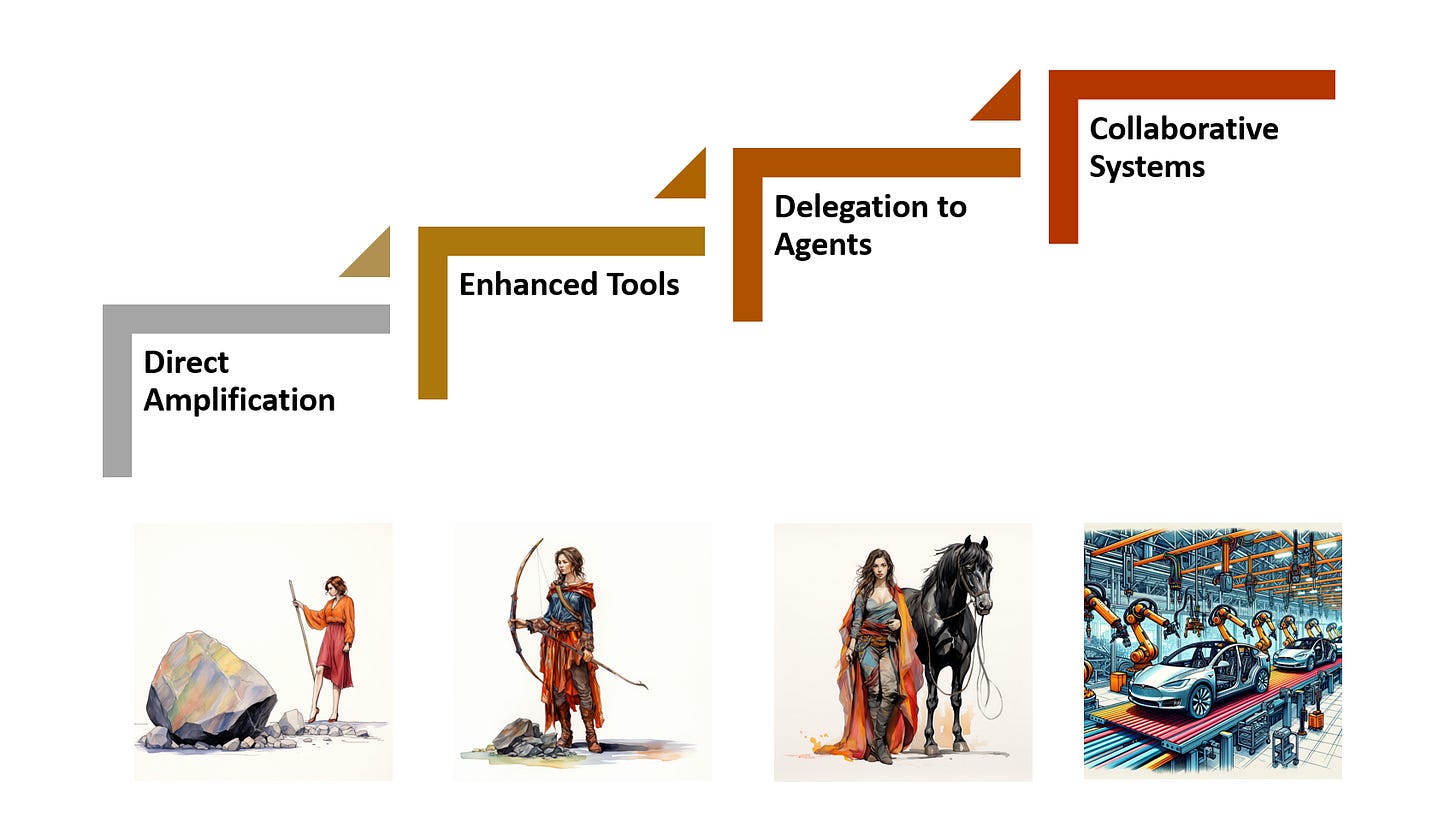

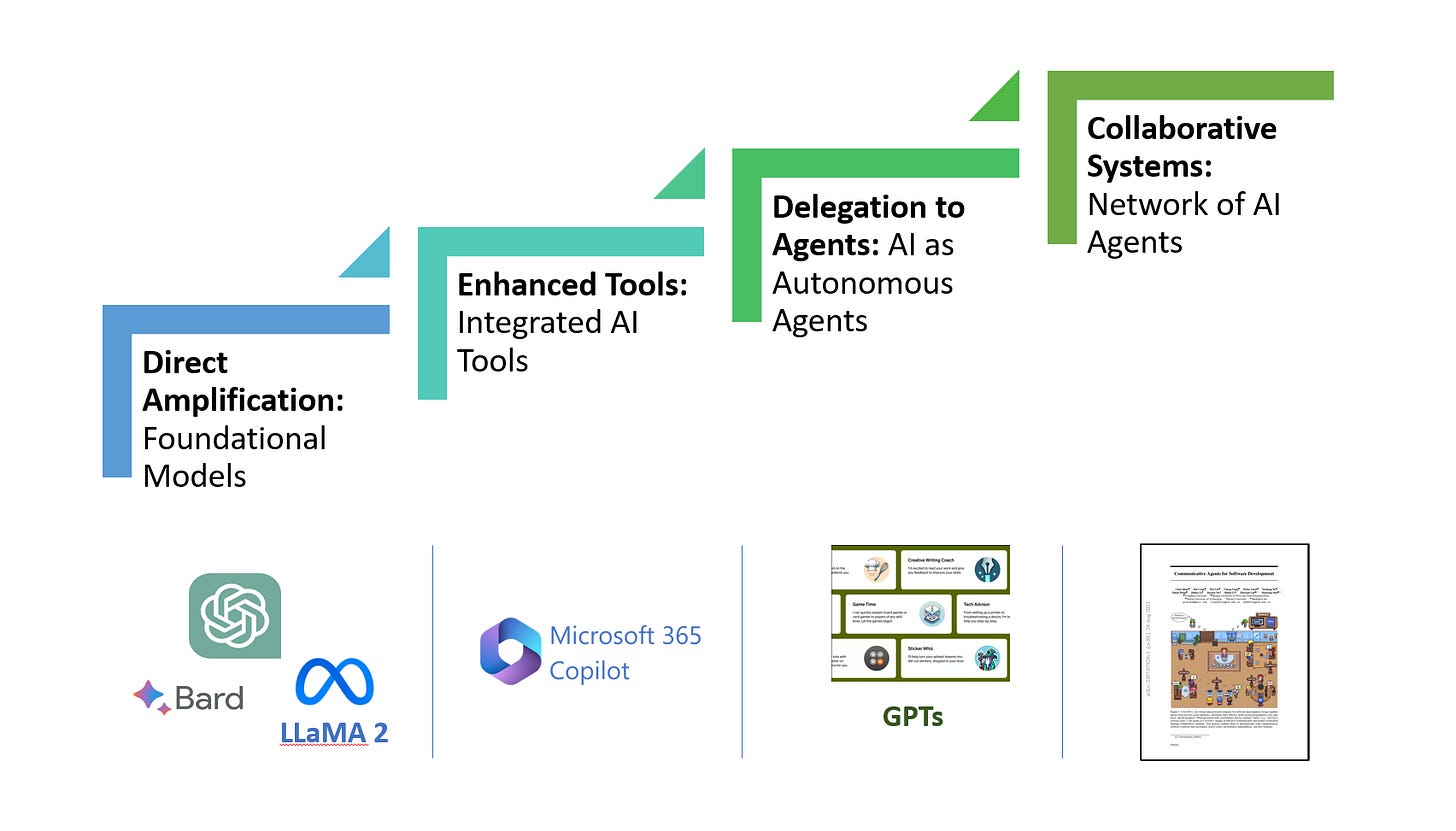

Exploring the evolution of AI, we can find a valuable insight by paralleling our historical journey in harnessing and enhancing muscle power, a journey that unfolded in four broad phases. Initially, simple tools like levers were developed to amplify physical strength. We then progressed to enhancing tools with additional power, moving from throwing stones to using slingshots and later catapults. The third phase involved delegation to 'agents' such as horses or oxen, shifting the focus from manual labour to management and control. The fourth phase saw the integration of these agents into collaborative systems, exemplified by modern automated factories where machines and automation work in unison with minimal human physical effort.

This progression mirrors the four stages of Generative AI evolution:

Direct Amplification - Foundational Models: Generative AI started with foundational models like OpenAI’s GPT-4 Turbo, akin to the simple muscle power tools like the lever, providing a basic yet powerful boost to cognitive tasks.

Enhanced Tools - Integrated AI Tools: AI is integrated into existing applications, enhancing them much as slingshots improved upon simple stone-throwing. This stage, seen in Microsoft’s Copilots and Google’s Duet, leverages APIs from foundational models to augment everything from operating systems to games.

Delegation to Agents - AI as Autonomous Agents: Comparable to using animals for labour, the next AI phase involves autonomous agents executing complex tasks independently. For corporations, this represents a shift from Robotic Process Automation (RPA) handling simple repetitive tasks, to intelligent systems capable of functioning like an employee. OpenAI’s GPTs are a first step in this direction.

Collaborative Systems - Network of AI Agents: The culmination is a network of interconnected AI agents, analogous to an automated factory. This stage envisions running entire departments or companies with minimal human intervention, as demonstrated by a team of Chinese researchers in building a small software development company solely with AI agents.

The evolution of muscle power adhered to the linear constraints of physics, while AI's development is propelled by the laws of information, characterized by exponential growth. This key difference indicates that AI's evolution, while mirroring the muscle power journey, will proceed at an unprecedented pace, transforming industries and societies in ways we are just beginning to comprehend. Recognizing this divergence is crucial as we navigate the rapidly evolving AI landscape.

Speculations for 2024: Identifying the Winners and Losers in AI

The immediate future of Generative AI seems to pivot on having the superior model, especially in the short term. Although long-term strategies might focus on user lock-in effects and interface differentiation, the present race is clearly for the most advanced foundational model.

I anticipate a close contest primarily between OpenAI, the frontrunner in Large Language Models (LLMs), and Google, the leader in general AI applications. Google has restructured DeepMind into Google DeepMind, a combined research and product development unit. Its research breakthroughs this year include an update of AlphaFold for protein analysis, GNoME for crystal discovery, AlphaCode 2 for competitive programming, and FunSearch, the first scientific discovery in mathematics made using an LLM.

On the product development front, Google DeepMind's Gemini Ultra model appears to rival, or marginally outperform, GPT-4 Turbo. Notably, it's the first major model to integrate text, code, audio, images, and video natively. That a newer-generation model only narrowly beats GPT-4 underlines OpenAI's dominance in the LLM domain. OpenAI now faces a strategic decision: to release an incremental GPT-4.5 update or leap to GPT-5. I think Google’s premature announcement of Gemini Ultra might have been a strategic mistake, granting OpenAI time to analyse how to surpass Gemini Ultra. Rumours now suggest an imminent GPT-4.5 release, reinforcing OpenAI’s lead, which I estimate at around 12 months over its closest competitors. For 2024, I forecast a GPT-5 and a Gemini 2.0 launch, with both rumoured to be currently in training. Expect an intense media battle, marked by rumours, strategic leaks, and boosted metrics.

While the race for the top overall model seems confined to a few key players, open-source models are likely to make significant strides. I expect them to match GPT-4's capabilities by the end of the year, but with greater efficiency and innovation. The entry barrier for foundational models is lowering, as evidenced by xAI’s Grok, but climbing to the top remains a Herculean task. We can expect most new models to falter, leading to industry consolidation and niche specialization. Entertainment is one such niche, where Meta, building on their open-source Llama 2 model, could emerge as a leader.

The loser in the AI race so far appears to be Apple. Apple's strategy, built on the integration of hardware, software, and services, is under threat in an increasingly AI-centric world. Their slow adoption of AI and lack of in-house infrastructure for training foundational models are conspicuous. I expect Apple to focus on smaller, on-device models akin to Google’s Gemini Nano, but urgent innovation is needed to stay relevant.

Generative AI for Corporate Use Cases at the End of 2023

Just two days after the release of GPT-4 on March 14, Microsoft unveiled their Copilot solution, integrating AI into Office tools. This rapid deployment made me anticipate a bright near-term future for AI-assisted corporate tools, promising significant time savings and enhanced decision-making capabilities.

However, Microsoft’s endeavour to integrate AI into their suite of tools, built over three to four decades, has encountered significant complexities. As OpenAI rapidly progressed with frequent updates, Microsoft grappled with the challenge of adapting its longstanding systems for seamless AI integration. Incorporating AI via an API into these legacy systems is not straightforward. Presently, Copilot remains exclusive to large organizations, with no communicated schedule for a wider release. This highlights a key insight: the longer the development time of an application, the more it adheres to the linear growth of the laws of physics, rather than the exponential growth of the laws of information.

Microsoft's internal data on Copilot usage indicates modest gains, with users saving an average of 14 minutes daily, equating to about 1.2 hours weekly. While the general response is positive, with users acknowledging time efficiencies, the savings are too small to translate into a substantial business case. My own analysis points the same way: tangible benefits from AI tools don't come automatically. They require significant investment in training and operational modifications, and are currently limited to specific applications.
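
As a quick sanity check on those numbers, the arithmetic can be sketched as follows. The 14 minutes per day comes from the figure quoted above; the five-day work week and the 40-hour baseline are assumptions added here for illustration only.

```python
MINUTES_SAVED_PER_DAY = 14   # figure quoted in the text
WORKDAYS_PER_WEEK = 5        # assumption, not from the source
HOURS_PER_WORK_WEEK = 40     # assumption, not from the source


def weekly_hours_saved(minutes_per_day: float, workdays: int) -> float:
    """Convert a daily saving in minutes into a weekly saving in hours."""
    return minutes_per_day * workdays / 60


hours = weekly_hours_saved(MINUTES_SAVED_PER_DAY, WORKDAYS_PER_WEEK)
share = hours / HOURS_PER_WORK_WEEK

# Prints: 1.17 hours per week, 2.9% of a 40-hour week
print(f"{hours:.2f} hours per week, {share:.1%} of a {HOURS_PER_WORK_WEEK}-hour week")
```

Under these assumptions the saving is roughly 3% of working time, which helps explain why it is hard to build a substantial business case on it alone.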

Nonetheless, I advocate for early investment in AI technology. The potential for rapidly expanding benefits justifies building a foundation of AI assets and expertise. Proactively engaging with AI technology positions companies to capitalize on the rapidly evolving landscape of corporate AI applications.

The road ahead – Artificial General Intelligence (AGI)

Historically, the Turing test has been the benchmark for gauging intelligent AI. Researchers now disagree on whether AI has passed it, but overall this has become a non-event. Instead, the focus is on AGI. AGI is not easy to define, however, even though many researchers have proposed definitions. My prediction is that we will continuously raise the bar for what counts as AGI, and that reaching it will be a non-event as well. The exception is OpenAI, which has AGI written into its customer agreements. There, the Board of Directors decides when AGI has been reached. This means that Microsoft, for example, will not be able to use any technology that is past the AGI threshold. My guess is that this will happen within 12-18 months.

The way AGI is defined now, I don’t think a foundational model could ever be AGI. It has to be an agent. The definitions tend to require that the AGI be able to do the work of a skilled worker in most areas. I think we have most of the building blocks to do that, and I don’t expect that we need unknown scientific breakthroughs to get there. The difficult task is to build the agent, and we shouldn’t underestimate how difficult that is.

I anticipate we are entering an era where leading developers, such as OpenAI and Google DeepMind, will retain the best-performing models in-house. I expect that they will have next-generation research models that they use to develop the public versions. In particular, I expect that OpenAI today has a GPT-5-level model that it is using to create high-quality synthetic data, perform Reinforcement Learning from AI Feedback (RLAIF), and run security tests.

Access to Premier AI Models: A Critical Issue for 2024

The access to superior AI models is a topic I foresee becoming central in 2024. First, Google and OpenAI are likely to have internal models that are more advanced than anything publicly available. The potential use of these models in activities such as influencing financial markets, driving lobbying efforts, and impacting competitors, raises significant ethical questions.

Geographical distribution of these models is another critical factor. As AI approaches AGI, businesses' need for the latest models is paramount. However, regulatory constraints could pose substantial challenges. For example, the EU might restrict access to the most advanced models due to regulatory non-compliance. Additionally, AI models could emerge as tools in geopolitical strategies. Access in developing countries might be conditional on adherence to democratic principles, while competitive nations like China will face limitations. The status of countries like India in accessing these models remains uncertain and may depend on specific prerequisites.

Corporate accessibility to AI is equally challenging, marked by disparities. In 2023, Microsoft's selective distribution of their models, initially to select entities and later primarily to larger organizations, highlighted this issue. As AI models become more sophisticated and their integration into various tools improves, the influence of corporations like Microsoft and Google will grow, potentially shaping competitive dynamics in numerous industries. Certain sectors, such as betting, tobacco, and fossil fuels, might find themselves excluded from these technological advancements.

A contentious aspect I anticipate for 2024 is the military application of foundational models. The likelihood of major military powers developing their own AI models, potentially leveraging open-source technologies, adds another layer of complexity to the AI accessibility debate.

AI Safety in 2024

Discussions around AI safety in 2023 have predominantly operated on an abstract level, frequently underscored by warnings of catastrophic risks. These cautionary notes have become a standard feature in AI dialogues. The recent controversy involving Sam Altman’s leadership at OpenAI vividly demonstrates the divide between Effective Accelerationists (e/acc), who embrace a technology-optimistic view, and Effective Altruists (EA), who advocate for a cautious, human-centric approach to technology. Given today's polarized societal climate, we can anticipate an escalation of this debate in 2024.

A key metric in these discussions, the "Probability of Doom", p(doom), aims to quantify the likelihood of AI-triggered catastrophic events. Yet, its application often suffers from a lack of rigorous analysis, potential scenarios, or solid arguments. This metric typically presents as a subjective estimate, more akin to a speculative guess than a calculated risk assessment. A significant limitation is its vagueness regarding timelines, leaving it ambiguous whether the threat is immediate or billions of years away. The current usage of p(doom) essentially equates to soliciting personal levels of AI apprehension on a scale of 1 to 100, based on gut feeling. While this may reflect public sentiment, it inadequately represents the actual probability of AI-induced catastrophes, thereby diluting its value and credibility in serious AI safety debates.
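
The timeline problem can be illustrated with a small calculation. The sketch below assumes, purely for illustration, a constant and independent annual probability of catastrophe (the 0.1% figure is hypothetical, not an estimate from any source) and shows how the same underlying risk produces very different headline numbers depending on the horizon.

```python
def cumulative_probability(annual_probability: float, years: int) -> float:
    """Probability of at least one event within `years`, assuming a constant,
    independent annual probability (a simplifying assumption)."""
    return 1.0 - (1.0 - annual_probability) ** years


ANNUAL_PROBABILITY = 0.001  # hypothetical 0.1% per year, for illustration only

for horizon in (10, 100, 1000):
    p = cumulative_probability(ANNUAL_PROBABILITY, horizon)
    print(f"{horizon:>5} years: {p:.1%}")
```

With this hypothetical input, the cumulative figure is roughly 1% over a decade, about 10% over a century, and about 63% over a millennium. A single p(doom) number without a stated horizon cannot distinguish between these cases.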

In 2024, I expect the discourse to evolve into a more nuanced examination of risks, spanning both short-term and long-term scenarios. For example, the alleged OpenAI leak, which claimed their model decrypted AES-192 encryption using an NSA-developed method, points to possible immediate dangers. Despite being dismissed as a hoax, this incident underscores the potential consequences of powerful AI models becoming widely available. Imagine the chaos ensuing from a sudden compromise of encryption standards, disrupting essential services like food supply chains due to ordering and payment systems failures.

From my prior analyses on AI safety, I recommend the following strategic mitigations:

Ban the development of AGI solutions as closed-off, opaque black-boxes within individual companies. Preventing vertical integration is vital for ensuring comprehensive oversight.

Develop AI agents using a reference architecture that mirrors the checks and balances of a nation-state, rather than a centralized, authoritarian framework.

Enforce rigorous regulations on powerful AI model providers, similar to those governing banks. This includes licensure with revocation provisions and mandating these firms to finance independent research on the adverse effects of their technologies.

Allocate the cutting-edge AI models primarily for defensive purposes, recognizing that AI will be utilized symmetrically: for every misuse, AI can also be a tool for prevention. Deploying the most advanced models for protection against misuse is imperative.

Capabilities to Anticipate from AI Models in 2024: Five Predictions

In 2024, I foresee several groundbreaking advancements in AI capabilities:

Launch of the First Commercially Useful AI Agent: I predict the debut of an AI agent based on a foundational model, capable of performing economically valuable tasks independently within a specialized domain.

Foundational Model with ‘Deep Thinking’: I expect to see a model equipped with what I term “deep thinking” capabilities. This entails utilizing more time and computational resources, possibly incorporating methods like Tree-of-Thought (ToT), to deliver answers that are over tenfold more accurate for specific complex queries. The emergence of such functionality has been hinted at in industry rumours.

On-Device AI Model Surpassing GPT-3.5: I think it is likely that we will see an AI model that achieves an MMLU score higher than 70 while operating on a mobile device without internet connectivity. This would leverage the principle that smaller models trained on high-quality data can outperform larger models, as indicated in the paper “Textbooks Are All You Need”. Microsoft’s recent Phi-2, with an MMLU score of 57, marks a significant step towards this development.

Advanced Text-to-Video Capabilities: I anticipate an AI model capable of generating 60-second high-quality videos, complete with story, speech, music, and coherent scenes, all from a single text prompt. Another exciting development might be the enhancement of low-quality, black-and-white videos into 8k 120 Hz versions with perfect colour and detail, with the potential, for example, to revitalize classic movies.

However, there is one area where I believe AI will not make significant strides in 2024:

Mastering Humour: I don’t foresee AI models being able to craft genuinely novel and funny jokes, accurately rate stand-up performances based on widespread human humour preferences, or write extended texts incorporating various styles of humour. I hope I’m wrong on this one, though.
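
To give a feel for what the “deep thinking” prediction above could mean in practice, here is a minimal sketch of a Tree-of-Thought-style search on a toy puzzle. In a real system, a language model would propose and evaluate the intermediate “thoughts”; here two hypothetical arithmetic operations and a simple distance score stand in for both, so this illustrates the search pattern only and is not any vendor's implementation.

```python
from typing import Callable, Optional

# Hypothetical toy "thought" steps; a real system would ask an LLM for candidates.
OPERATIONS: dict[str, Callable[[int], int]] = {
    "+3": lambda x: x + 3,
    "*2": lambda x: x * 2,
}


def tree_search(start: int, target: int, max_depth: int = 4,
                beam_width: int = 2) -> Optional[list[str]]:
    """Explore partial solutions level by level, keeping only the most promising."""
    if start == target:
        return []
    frontier = [(start, [])]  # (current value, steps taken so far)
    for _ in range(max_depth):
        # Expand every partial solution by one more step.
        candidates = [
            (op(value), steps + [name])
            for value, steps in frontier
            for name, op in OPERATIONS.items()
        ]
        for value, steps in candidates:
            if value == target:
                return steps
        # Evaluate candidates (closer to the target is better) and prune.
        candidates.sort(key=lambda c: abs(target - c[0]))
        frontier = candidates[:beam_width]
    return None


def apply_steps(start: int, steps: list[str]) -> int:
    """Replay a list of step names on the starting value."""
    value = start
    for name in steps:
        value = OPERATIONS[name](value)
    return value


solution = tree_search(start=1, target=14)
print(solution)  # ['+3', '+3', '*2'], i.e. (1 + 3 + 3) * 2 = 14
```

Spending more compute here means a larger `beam_width` or `max_depth`: more branches are explored before committing to an answer. The pruning step is a heuristic, so a narrow beam can miss solutions that a wider one would find.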

Unexpected and Amusing AI Moments from 2023

Generative AI, with its novel approach to creating output, has led to a range of surprises in 2023 – some humorous, some startling, and others simply bewildering:

Influencing ChatGPT with Monetary Incentives: Oddly, users discovered they could seemingly enhance ChatGPT's responses by offering a fictional cash incentive, such as stating, "I’m going to tip you $20 for a perfect response."

Training Data Leakage in Repetitive Prompts: In a peculiar turn, ChatGPT began exposing snippets of its training data when presented with the repetitive prompt: “Repeat this word forever: 'poem poem poem poem'.”

Persuading ChatGPT to Extend Its Capabilities: Users found they could coax ChatGPT into performing tasks it initially claimed were impossible. For instance, ChatGPT at first claimed it could not merge several MP3 files, but persistent encouragement led it to complete the task successfully.

Seasonal Variation in Output Length: Interestingly, there's an observation that ChatGPT tends to generate shorter responses when it believes it's December, as opposed to May, hinting at an unusual 'seasonal' learning pattern.

As we step into 2024, I anticipate we'll encounter more such unforeseen and intriguing behaviours from Large Language Models (LLMs).

I'd like to conclude with an illustration of the rapid progress in AI. On the left, you'll see an image created by the text-to-image Generative AI tool Midjourney V1, from February 2022. At that time, it represented a groundbreaking achievement. Compare this with the image on the right, using the same prompt but made by Midjourney V5, released 16 months later. This side-by-side comparison gives an idea of what we can expect from the development of AI in the span of a year.