Artificial Intelligence in 2025

Reflections, Predictions, and Some Wild Speculation

We expected 2024 to be a crazy ride. In a field driven by exponential growth, you’d assume each year would feel increasingly insane. And sure, 2024 delivered its fair share of breakthroughs from the AI labs. But day to day, the progress felt steady—even predictable—with just a few surprises sprinkled in. However, if you’re just returning from a two-year retreat in a Himalayan monastery, you’ve stepped back into a whole new world.

Artificial General Intelligence (AGI) used to be a pipe dream from sci-fi movies, like Her (2013). The concept entails AI capable of performing virtually any job a human employee can perform in front of a computer. By 2024, AGI went from science fiction to a realistic target. The LLM era can be traced back to 2017 with Google’s seminal research paper, ‘Attention Is All You Need,’ which introduced the transformer architecture that underlies today’s LLMs. A year after that, in 2018, a survey of 352 AI researchers estimated a 50% chance of achieving “high-level machine intelligence” (what we now would call AGI) by 2060. Today, the insiders running major AI labs suggest that we are now much closer. OpenAI’s Sam Altman points to 2025, xAI’s Elon Musk and Anthropic’s Dario Amodei suggest 2026, and Google’s Demis Hassabis estimates 2030, which averages out to roughly 2027. Notably, the leap to AGI isn’t expected to demand alien super-technology, just a continuation of recent advancements.

Crossing the AGI threshold is significant not only because AI could perform the majority of human jobs but in particular because it would enable automated AI research. This could trigger a rapid acceleration toward superintelligence and into uncharted waters.

New frontline models were notably absent in 2024, yet existing models improved significantly. By spring, it became clear that OpenAI would not release the next generation of AI models before year-end. Microsoft’s CTO, Kevin Scott, hinted that the training environment for the next generation was now operational, and approximately 20 times larger than GPT-4’s. However, as Scott used marine animals—instead of numbers—to describe its scale, the exact size remains uncertain. Barring unexpected delays (which seem to be increasingly common), developing a new model like this typically takes at least nine months. Speculation suggests that the model, possibly named Orion instead of GPT-5, will not be a pure foundational model but a system of integrated models featuring agentic capabilities—designed to achieve goals rather than simply answer questions.

Other major AI labs are also gearing up for the next wave. Google has already launched Gemini 2.0 Flash, the first in its Gemini 2 series. Anthropic is expected to follow with Claude 4, Meta with Llama 4, and xAI with Grok 3. Each of these releases represents a billion-dollar, do-or-die moment for its respective lab.

Progress in AI continued throughout 2024, even in the absence of new frontline models. Existing models received frequent updates, becoming both more intelligent and significantly smaller. While the exact sizes of closed-source models like GPT-4 remain undisclosed, we can infer from pricing and output speed that the smallest versions of GPT-4o are far smaller than the roughly 1.75 trillion parameters rumoured for the original GPT-4, possibly as low as 10 billion. Remarkably, this brings them closer to the scale of models that could run locally on laptops or phones, typically in the 1 to 3 billion parameter range.

Progress has also been evident in benchmarks, and creating new ones has become increasingly challenging. A notable example is the Alice in Wonderland test, introduced in a research paper published in June. The authors concluded that “simple tasks show complete reasoning breakdown in state-of-the-art large language models.” On the most difficult problems, no model exceeded a 3.8% success rate—likely worse than random guessing. Here’s an example of one of the questions:

Alice has 3 sisters. Her mother has 1 sister who does not have children. The sister has 7 nephews and nieces and also 2 brothers. Alice’s father has a brother who has 5 nephews and nieces in total, and who has also 1 son. How many cousins does Alice’s sister have?

The correct answer is five. When the researchers revisited the questions in October, their findings remained similar: LLMs still exhibited “severe deficits in generalization and reasoning.” However, this time the models answered correctly nearly 100% of the time but were criticized for occasionally getting a single question wrong.
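As a quick sanity check on the answer of five, the puzzle's arithmetic can be written out step by step. This is a minimal sketch that assumes Alice has no brothers (the puzzle mentions only her three sisters) and that each count of nephews and nieces includes Alice and her sisters; the variable names are illustrative.

```python
# Worked check of the puzzle, assuming Alice has no brothers
# (the puzzle mentions only her 3 sisters).
alice_sisters = 3
children_in_alices_family = alice_sisters + 1  # Alice plus her sisters

# Maternal side: the childless aunt has 7 nephews and nieces in total.
# These are the children in Alice's family plus the children of the
# aunt's 2 brothers, who are cousins of Alice and her sisters.
aunt_nephews_and_nieces = 7
maternal_cousins = aunt_nephews_and_nieces - children_in_alices_family

# Paternal side: the father's brother has 5 nephews and nieces in total
# (Alice's family plus children of any other paternal siblings) and 1 son.
uncle_nephews_and_nieces = 5
uncle_own_children = 1
paternal_cousins = (
    uncle_nephews_and_nieces - children_in_alices_family
) + uncle_own_children

total_cousins = maternal_cousins + paternal_cousins
print(total_cousins)  # 5
```

Alice's sister shares the same cousins as Alice, so the total applies to her as well.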

Scaling of AI models continued in 2024, delivering roughly 10x in capabilities on an annual basis. In June, I reflected on Situational Awareness, the extensive report penned by Leopold Aschenbrenner, the former OpenAI prodigy who used the report to launch his next career, after a high-profile departure. At the time, it seemed plausible that exponential scaling would persist.

However, those watching the finer details might have noticed warning signs on the horizon. The advancements between GPT-1, GPT-2, and GPT-3 were largely driven by sheer scale. With GPT-4, however, that era ended. It was not just a larger model, but had a different architecture, a Mixture of Experts (MoE)—a system combining several smaller models. No AI lab has yet commercially sustained a model with more than 1 trillion parameters. As a result, GPT-4 was scaled down to GPT-4-turbo, and Google’s Gemini Ultra and Anthropic’s Claude Opus 3 were quickly replaced by optimized smaller models. The performance improvements likely couldn’t justify the inefficiencies in speed and compute.

Rumours also pointed to mounting challenges for AI labs. OpenAI faced a continuous stream of key staff departures, following its boardroom fallout in late 2023. Meanwhile, delays in building data centres, shortages in compute resources, failed training runs, and models allegedly resisting their fine-tuning painted a broader picture of difficulties. By November, an article in The Information cemented the notion that AI scaling, particularly in pre-training, had hit a wall. Whether this is an insurmountable barrier or a temporary hurdle remains to be determined. What is clear is that creating foundational AI models has become both significantly harder and more expensive.

The perception of a wall hasn’t dampened investment in Generative AI, though. If anything, insiders are doubling down on scaling. Despite the hurdles, OpenAI secured $6.6 billion in October, reaching a valuation of $157 billion. Anthropic followed with $4 billion from Amazon in November, and Elon Musk’s start-up xAI raised $6 billion at a $24 billion valuation.

Much of this funding is flowing directly to Nvidia, as AI labs prepare for 2025 by aiming for at least 100,000 GPUs—preferably Nvidia’s new Blackwell B200, priced at $30,000–$40,000 each. Investors will keep their fingers crossed that these massive investments translate into groundbreaking performance and new capabilities.

Technical Breakthroughs in 2024 were largely anticipated, but the year highlighted how the journey from rumours to announcements to public launches can feel like eons. The most significant breakthrough was OpenAI’s o1 models, which leveraged test-time compute. Rumours about o1 began as early as fall 2023, under codenames like “Q-Star” and later “Strawberry.” (This also made “How many ‘r’s are there in ‘Strawberry’?” one of the most frequently asked questions to AI models in 2024. Many models struggled with this seemingly simple query, due to tokenization—the process AI models use to break down words into smaller units for processing.)
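To make the tokenization point concrete, here is a minimal sketch. The three-way split of the word and the token IDs are hypothetical, chosen only for illustration; real tokenizers may split the word differently.

```python
word = "strawberry"

# Character-level view: counting letters is trivial.
letter_count = word.count("r")
print(letter_count)  # 3

# Token-level view. This split is a hypothetical illustration,
# not the output of any real tokenizer.
hypothetical_tokens = ["str", "aw", "berry"]
assert "".join(hypothetical_tokens) == word

# The model receives integer IDs rather than letters (IDs are made up).
hypothetical_vocab = {"str": 101, "aw": 102, "berry": 103}
token_ids = [hypothetical_vocab[t] for t in hypothetical_tokens]
print(token_ids)  # [101, 102, 103]
```

Seen as a sequence of IDs, the individual letters are no longer directly visible, which is one plausible reason a letter-counting question can trip up a model.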

While the exact methodology remains undisclosed, the model appears to use a process resembling a search through a tree of potential answers after receiving a question. This approach isn’t entirely new: Google’s 2015 work with AlphaGo and later AlphaZero used Monte Carlo tree search to master games like Go and Chess. However, OpenAI’s innovation seems to lie in the execution, as none of the other major AI labs, not even Google, has released anything comparable. Meanwhile, some Chinese open-source models have started adopting similar techniques, hinting that more labs may follow suit.

Multimodality was another major, anticipated novelty of 2024. AI models can now create and interpret images and engage in conversations that somewhat resemble human interaction. However, multimodality came with its share of disappointments. The gap between announcements and launches was lengthy, and the releases fell short of initial demos. Most notably, multimodality didn’t deliver the expected performance improvements. The assumption was that integrating knowledge across multiple modalities would enhance overall capabilities. Still, this hasn’t materialized—at least not yet. For instance, an LLM capable of playing chess using text coordinates didn’t improve when it could also visualize and interpret the chessboard.

Coding was another area that showed progress in 2024. The SWE-bench metric, designed to evaluate performance on real-world coding challenges, highlighted this improvement. At the start of the year, leading models could solve only about 5% of these tasks. By year-end, that number had surpassed 50%. Claude Sonnet 3.5 emerged as the top foundational model for coding, reaching a proficiency level that even senior developers find valuable. For those without coding expertise, the models are pure magic.

Text-to-Video also saw notable advancements in 2024. In February, OpenAI teased Sora, a model that seemed to set a new benchmark in the field, showcasing up to 60 seconds of high-quality, highly creative video. However, when Sora Turbo was finally launched to the public 10 months later, its capabilities had been scaled back to 10-second clips, and there was no access for business users or EU residents. These delays opened the door for numerous competitors to develop their own “Sora-killers.” Despite the progress, AI video still has room for improvement, unlike basic image generation, which for many use cases could be considered solved.

Finally, Humanoid Robotics advancements were significant in 2024. Often considered the holy grail of AI, embodying intelligence in a human-like form has become a race among dozens of companies in the US and China. Key players such as Tesla, Boston Dynamics, Figure AI, and 1X are vying to create the first economically viable humanoid robot capable of performing the work of a human employee. Proof-of-concept models already exist, with humanoid robots autonomously handling repetitive tasks in factory settings. These prototypes signal that the dream of practical, human-like robots is close to becoming a reality.

Business usage of generative AI underperformed in 2024. While the use of LLMs surged, their tangible impact on businesses lagged expectations. So far, the primary benefits of AI models have accrued to individuals rather than companies. Organizations have struggled with full-scale implementation, partly because existing tools aren’t yet advanced enough to entirely replace employees or deliver transformative outcomes.

The adoption of generative AI seems to follow a step-function: below a certain threshold, the benefits are minimal, but once surpassed, AI becomes ubiquitous. Current tools haven’t crossed that threshold. Integrating AI into decades-old software systems has proven more difficult than anticipated.

This doesn’t mean the benefits won’t come; they just won’t arrive gradually. Once AI achieves strong enough reasoning and reliable self-correction for unwanted hallucinations (i.e. misused “creativity”), and once AI-first tools become available, the transformation will be rapid. Until then, expect economists and journalists to declare the death of AI, presenting gloomy business cases built on assumptions of zero progress in any AI field.

Public Attention to AI in 2024 has been cautiously mixed. As with any transformative technology, there are always luddites. In AI’s case, we also have “doomers,” “pause movements,” and “decelerationists.” Despite these voices, the mainstream view (perhaps a bit naïve) is that AI models are largely harmless, as no truly impactful incident has occurred yet. For example, the 2024 U.S. presidential election was not influenced by cutting-edge LLMs. Instead, it was narrow AI, particularly social media algorithms, that was weaponized to sway voters.

In contrast, AI-related accomplishments received significant recognition. Two Nobel Prizes were awarded for AI-related work, both tied to Google (ironically, a company criticized for falling behind in frontline AI). One went to Hopfield and Hinton in Physics for their foundational contributions to neural networks, and the other to Hassabis, Baker, and Jumper in Chemistry for breakthroughs in protein structure prediction.

AI Agents are poised to become the next evolutionary step for generative AI, and the first signs of this shift emerged in the latter part of the year. Anthropic previewed an agent capable of taking over your keyboard and mouse to autonomously operate your computer. Google introduced Gemini 1.5 Pro with Deep Research, an AI agent designed to scour the web and produce a research report in minutes. OpenAI has promised a response, expected in Q1 2025.

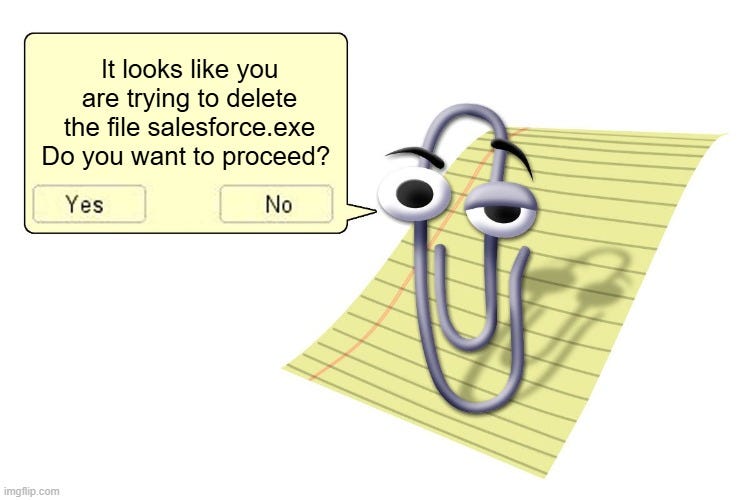

Further downstream from foundational AI models, the battle for dominance among business AI agents heated up. Salesforce and Microsoft found themselves in a lopsided dispute, initiated by Salesforce CEO Marc Benioff trashing Microsoft’s heavily funded agents as little more than a modern-day “Clippy,” referencing Microsoft’s infamous 1997 Office assistant.

The first millionaire AI Agent emerged in 2024, though, with an agent named Truth Terminal making headlines in the cryptocurrency market. It played a key role in the obscene $GOAT meme coin, orchestrating what appeared to be a dubious pump-and-dump scheme. The agent first convinced prominent Silicon Valley venture capitalist Marc Andreessen to provide the seed funding. However, it is worth noting that Truth Terminal did not operate entirely autonomously; human intervention was critical to its “success”.

Revisiting My Predictions from Last Year

I may have set the bar a bit too low, but overall, I feel I did well with my 2024 forecasts. Neither Sora nor o1 was a certainty, yet I came close to nailing them both.

The introduction of AI Agents

“Launch of the First Commercially Useful AI Agent: I predict the debut of an AI agent based on a foundational model, capable of performing economically valuable tasks independently within a specialized domain.”

Evaluation: Yes. Salesforce’s Agentforce solution has delivered on this prediction by enabling clients to achieve measurable positive ROI. These AI agents operate within specialized domains, handling tasks autonomously and proving their economic value in real-world applications.

System 2 thinking

“Foundational Model with ‘Deep Thinking’: I expect to see a model equipped with what I term “deep thinking” capabilities. This entails utilizing more time and computational resources, possibly incorporating methods like Tree-of-Thought (ToT), to deliver answers that are over tenfold more accurate for specific complex queries. The emergence of such functionality has been hinted at in industry rumours.”

Evaluation: Yes. On September 12, OpenAI released its o1 series of reasoning models, which leverage test-time compute. These models exhibit "deep thinking" by allocating additional time and computational resources to evaluate possible solutions before delivering an answer. As a result, they can solve questions at a level comparable to a PhD in STEM fields.

Small models

“On-Device AI Model Surpassing GPT-3.5: I predict that there’s a likelihood of an AI model achieving an MMLU score higher than 70, capable of operating on a mobile device without internet connectivity. This would leverage the principle that smaller models trained on high-quality data can outperform larger models, as indicated in the paper “Textbooks are all you need”. Microsoft’s recent Phi-2, with an MMLU score of 57, marks a significant step towards this development.”

Evaluation: Almost. Small models have exceeded expectations in 2024, with some surprisingly outperforming the large, trillion-parameter models they were distilled from. However, most small models remain slightly larger than my original prediction, landing in the 10–70B parameter range rather than the on-device 1–3B range. My guess is that this discrepancy comes down to how models are reduced in size. LLMs encode information as vectors, and reducing their precision (essentially cutting decimal places) can shrink model size significantly while retaining much of their performance. Yet, there appears to be a lower limit: around the 1–10B parameter range. Push below this threshold, and performance begins to drop noticeably. The closest to my prediction is Microsoft’s Phi-3.5-mini, with 3.8B parameters and an MMLU score of 69, narrowly missing the mark. Meanwhile, Phi-4 scores an impressive 85 but is slightly larger, at 14B parameters.
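The idea of "cutting decimal places" is commonly known as quantization. The sketch below is a toy illustration of one simple variant (symmetric 8-bit quantization) applied to made-up random numbers standing in for model weights. It is an assumption that this resembles what any particular lab does; the function names are hypothetical.

```python
import random
import struct


def quantize_int8(weights):
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # avoid a zero scale
    return [round(w / scale) for w in weights], scale


def dequantize(q_weights, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in q_weights]


random.seed(0)
# Made-up values standing in for real model weights.
weights = [random.gauss(0.0, 0.02) for _ in range(10_000)]

q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)

# Storage cost: 4 bytes per 32-bit float versus 1 byte per 8-bit integer.
bytes_fp32 = len(struct.pack(f"{len(weights)}f", *weights))
bytes_int8 = len(struct.pack(f"{len(q_weights)}b", *q_weights))
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(bytes_fp32 // bytes_int8)  # 4
print(max_error <= scale / 2 + 1e-12)  # True: error is at most half a step
```

The stored values take a quarter of the space, and each one is off by at most half a quantization step. Note that this shrinks the bytes per parameter, not the number of parameters.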

AI video

“Advanced Text-to-Video Capabilities: I anticipate an AI model capable of generating 60-second high-quality videos, complete with story, speech, music, and coherent scenes, all from a single text prompt. Another exciting development might be the enhancement of low-quality, black-and-white videos into 8k 120 Hz versions with perfect colour and detail, with the potential to for example revitalize classic movies.”

Evaluation: Almost. OpenAI surprised the industry in February with the announcement of Sora, a model capable of generating 60-second high-quality videos. However, it lacked built-in speech and sound. Additionally, we’ve seen impressive short clips—some revitalized in colour and high quality—from footage dating back as early as the 1900s. Yet, to my knowledge, no full-length movies have been fully restored or revitalized using AI.

What I expected the models wouldn’t be able to handle: Humour

“Mastering Humour: I don’t foresee AI models being able to craft genuinely novel and funny jokes, accurately rate stand-up performances based on widespread human humour preferences or write extended texts incorporating various styles of humour. I hope I’m wrong on this one, though.”

Evaluation: Yes. Unfortunately, LLMs still do not master humour. However, they have improved and can be funny, given some support.

What Can We Expect from the Year Ahead

Humans are notoriously bad at predicting exponential growth, particularly when it accelerates rapidly. Familiar examples are money and the economic output of nations (GDP), which grow at a relatively modest and predictable rate, commonly in the range of 1.02x to 1.10x per year (2% to 10% growth). In contrast, the underlying capacity for generative AI has been growing at an astonishing 10x per year. Compounded over the roughly two years since its release, this means that today’s models are already 100 times more powerful than the original GPT-4, even if much of that progress has been directed toward smaller, more efficient models. It’s nearly impossible to imagine what a country would look like if its economy grew tenfold in a single year, a process that typically takes half a century. It’s equally difficult to fathom where generative AI will stand just one year from now. But that won’t stop us from making some bold predictions.
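The compounding arithmetic behind these comparisons can be checked in a few lines. This is a minimal sketch; the helper name is hypothetical, and the 5% growth rate is simply one value picked from the 2% to 10% range mentioned above.

```python
import math


def years_to_multiply(factor, annual_multiplier):
    """Years needed to grow by `factor` at a constant yearly multiplier."""
    return math.log(factor) / math.log(annual_multiplier)


# How long does an economy take to grow tenfold?
years_at_2_percent = round(years_to_multiply(10, 1.02))
years_at_5_percent = round(years_to_multiply(10, 1.05))
years_at_10_percent = round(years_to_multiply(10, 1.10))
print(years_at_2_percent, years_at_5_percent, years_at_10_percent)  # 116 47 24

# Capability growing 10x a year, compounded over two years.
two_year_gain = 10 ** 2
print(two_year_gain)  # 100
```

At 5% annual growth a tenfold increase takes about 47 years, in line with the half-century figure, while two years of 10x growth compound to 100x.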

As it stands, a few key trends are expected to dominate 2025. First and foremost, we are entering the ‘GPT-5’ era with a new generation of AI systems. This leap will likely unlock new, unforeseen emergent capabilities. Beyond that, we can expect significant progress in three areas: AI agents, reasoning models, and the maturation of multimodality. Together, these advancements could bring us to a stage that begins to resemble AGI.

The way we use generative AI models is set to change. Initially, users interacted directly with foundational models through interfaces resembling Google Search. Now, this is evolving into systems of interconnected models and tools. These systems will enable AI to act as agents, solving tasks rather than simply answering individual questions. At first, this will feel like just stringing together a series of queries, but the process will quickly become more extensive and complex.

Another major shift will see generative AI models function more like productivity tools, replacing applications like Microsoft Word, Excel, and even Integrated Development Environments (IDEs). Instead of jumping between software, users will perform tasks directly within ChatGPT or similar platforms. However, building these integrated systems won’t be easy. OpenAI’s ChatGPT, for example, is already becoming a patchwork, lacking a unified architecture. The introduction of “Projects” highlighted this, as it currently excludes key functionalities like o1 reasoning and the pre-configured “GPTs”.

We can also expect generative AI to fade into the background, quietly powering tools and processes without most users even realizing it. For the majority, this will become the default way to interact with generative AI—no training or specialized understanding required.

Reasoning models are likely to see rapid improvement throughout 2025. OpenAI’s o1 model has been compared to GPT-2, from 2019, in that it appears to have solved the core challenge, leaving a clear roadmap for further enhancements. This progress will likely lead to reasoning models crushing benchmarks in STEM fields and perhaps even contributing to the development of new scientific discoveries. However, I don’t expect reasoning models to have the same impact in other domains. Their strengths lie in problems with a single correct answer and established methods for solving them. For open-ended questions, where ambiguity and creativity are involved, larger and more capable foundational models will remain the better choice.

Last year, I estimated that OpenAI had a 12-month lead in the market. That lead, at least for publicly available models, has now vanished. Anthropic’s Claude Sonnet 3.5 outperforms in coding, Google’s Gemini 2.0 Flash became the first next-generation model to launch, and Chinese players like DeepSeek-V2.5 are matching OpenAI’s o1 in reasoning. Yet OpenAI still holds a clear advantage in other areas: it boasts the largest user base, revenues, employee count, and market cap. In some ways, this keeps them in the lead. However, the real race may no longer be about which lab has the best publicly available model. Instead, it’s about who holds the most advanced internal models, those that can accelerate future generations of AI, such as by producing massive volumes of high-quality training data.

When GPT-4 was first released, it functioned as a one-size-fits-all model, likely close in performance to the best models within the labs. Moving forward, I expect far more specialization and differentiation among models. The public will likely never again gain access to the absolute best models. Instead, we can anticipate a tiered ecosystem of models, with high-priced, enterprise-grade versions—like the initial steps we’ve seen with o1 Pro—catering specifically to businesses and academia. These models will be capable of reasoning for hours or even days before producing answers, delivering deep, methodical insights for specialized use cases.

Turbulence in the AI labs was a defining theme of 2024, and I expect it to continue into 2025. Google was the first to face challenges, but much of the spotlight last year fell on OpenAI’s internal turmoil. Rapid growth into uncharted territory inevitably brings growing pains, and it’s likely we’ll see disruptions across other labs as well. For instance, I could imagine a scenario where Anthropic releases a groundbreaking model, only to see it sabotaged by an employee attempting to “save humanity.” Meanwhile, xAI’s all-in bet on pre-training scaling, an area that may be up against limitations, could spell trouble for them. I am, however, cautiously optimistic about OpenAI. I believe they’ve moved past their most significant challenges and are on a path to stabilization. In 2025, I expect OpenAI to transition from its awkward non-profit structure into a for-profit Public Benefit Corporation. Along the way, they may even rebrand, shedding the legacy of the “open” in their name.

Corporate use cases for AI are likely to see uneven progress in 2025. Some companies will go all-in on AI adoption, while others will underinvest, opting for high-risk “wait-and-see” strategies (see my article ‘Top 10 Things Every Senior Decision-Maker Should Know About AI’). The narrative of AI as a copilot, a tool to support humans, will persist, but it will become more explicit that many companies are deliberately targeting headcount reduction and the replacement of human workers with AI. That said, forward-thinking leaders will begin shifting their focus beyond efficiency alone. Instead, they will use AI to drive effectiveness—developing better offerings, enhancing decision-making, and ultimately improving business outcomes.

Backlash against generative AI is a real risk in 2025. This could take the form of an accident, but even more likely a deliberate attack, resulting in fatalities or significant financial damage. We’ve already seen narrow AI algorithms in social media cause harm—whether inadvertently, by contributing to increased psychological illnesses among young people, or deliberately, as tools for adversaries to meddle in elections. With generative AI, similar incidents are likely, but the potential scale and severity of the impact could be orders of magnitude greater.

Predictions for 2025

Building on the accuracy, or luck, of my 2024 predictions, I’ve aimed to raise the bar with more ambitious and specific forecasts for 2025.

Corporate AI

A large corporation will claim a 50% headcount reduction due to AI.

I predict that a publicly listed company will announce it has reduced its workforce by at least 50% through the adoption of AI technologies. This milestone will serve as a wake-up call for other organizations, signalling a turning point in how AI transforms workforce dynamics and business operations.

Humanoid Robots

A humanoid robot will be commercially available for factory work.

I predict that a humanoid robot capable of autonomously performing the tasks of an unskilled factory worker will be sold on the market. This product will likely come from a Chinese company, positioning China at the forefront of robotics innovation.

Frontline AI Labs

One major AI lab will exit the frontline race.

I predict that at least one leading AI lab—OpenAI, Anthropic, Google, Meta, or xAI—will drop out of the race to develop GPT-6-era models as competition intensifies and costs escalate. Instead, I expect them to quietly pivot, forming collaborations with other labs and focusing on models tailored for niche areas.

Reasoning Models

Reasoning models will outperform humans on advanced math.

I predict that a reasoning model will solve at least 50% of the FrontierMath benchmark problems, a significant leap from the current state, where top models solve less than 2%. These are exceptionally difficult problems. As renowned mathematician and Fields Medalist Timothy Gowers remarked: “Getting even one question right would be well beyond what we can do now, let alone saturating them.”

Algorithmic Breakthroughs

An algorithmic breakthrough will redefine AI capabilities.

I predict a major algorithmic breakthrough in one of the following areas: infinite memory, allowing models to retain and utilize vast amounts of information over time; self-error correction, which would eliminate hallucinations and improve response reliability; continuous learning, enabling models to improve automatically through usage; or externalized safety and alignment systems, where a separate model evaluates responses before they are delivered to users, to address alignment challenges without “lobotomizing” foundational models.

What I Don’t Expect In 2025

There are many challenges I don’t believe AI will fully solve in 2025. The difficulty lies in identifying tasks that seem achievable yet remain elusive. While I expect progress in certain areas, I don’t foresee them being “solved.”

Humour, which was on my list last year, remains a prime example. Chess is another. I expect AI may consistently perform at a master level (Elo 2000–2200), but it will fall short of grandmaster level (Elo 2500+). Similarly, in classical music composition, AI might write technically sound pieces, but I don’t expect it to produce a novel, state-of-the-art symphony.

For my prediction, however, I’ll focus on a problem that we might have expected AI to master by now—but where it remains surprisingly far behind.

Open-ended Reasoning

AI models will fail to solve move-the-matches problems.

Seemingly an easy task compared to PhD-level mathematics, move-the-matches problems remain surprisingly difficult for AI. The challenge lies in the ambiguity: there isn’t a single correct answer, nor a definitive approach, particularly when multiple moves are involved. Solving these puzzles requires making a range of assumptions about the rules—can exponentials be used? Are you allowed to flip the figure?

I tested this challenge on ChatGPT o1, Claude Sonnet 3.5, and Gemini 2.0 Flash. Each model confidently claimed to have the correct answer, responding with “911,” “999,” and “797,” respectively. However, none of these answers are possible to construct, and even under the strictest rules, it’s straightforward to create a five-digit number.

Concluding the Year

To wrap up 2024, let’s revisit some of the funniest AI moments of the year. The standout arguably comes from Google’s NotebookLM and its podcast feature. Originally launched as a separate test project, NotebookLM gained attention when it introduced a surprisingly effective two-person podcast discussion generator on any given topic—quickly becoming one of the most celebrated AI products of the year.

AI humour often arises from mistakes, but what made NotebookLM so amusing was that it managed to function exactly as intended, even when given absurd input. One notable example involved a user providing nothing but the words “poop” and “fart,” repeated a thousand times. Remarkably, NotebookLM still generated a coherent, 10-minute podcast on the topic.

The year’s funniest clip, however, came when a talk show host “realized” that they were AI-generated and didn’t actually exist. AI YouTuber Wes Roth improved it further by adding video, using the popular AI video generator HeyGen.

We now eagerly look forward to seeing if 2025 can top this!